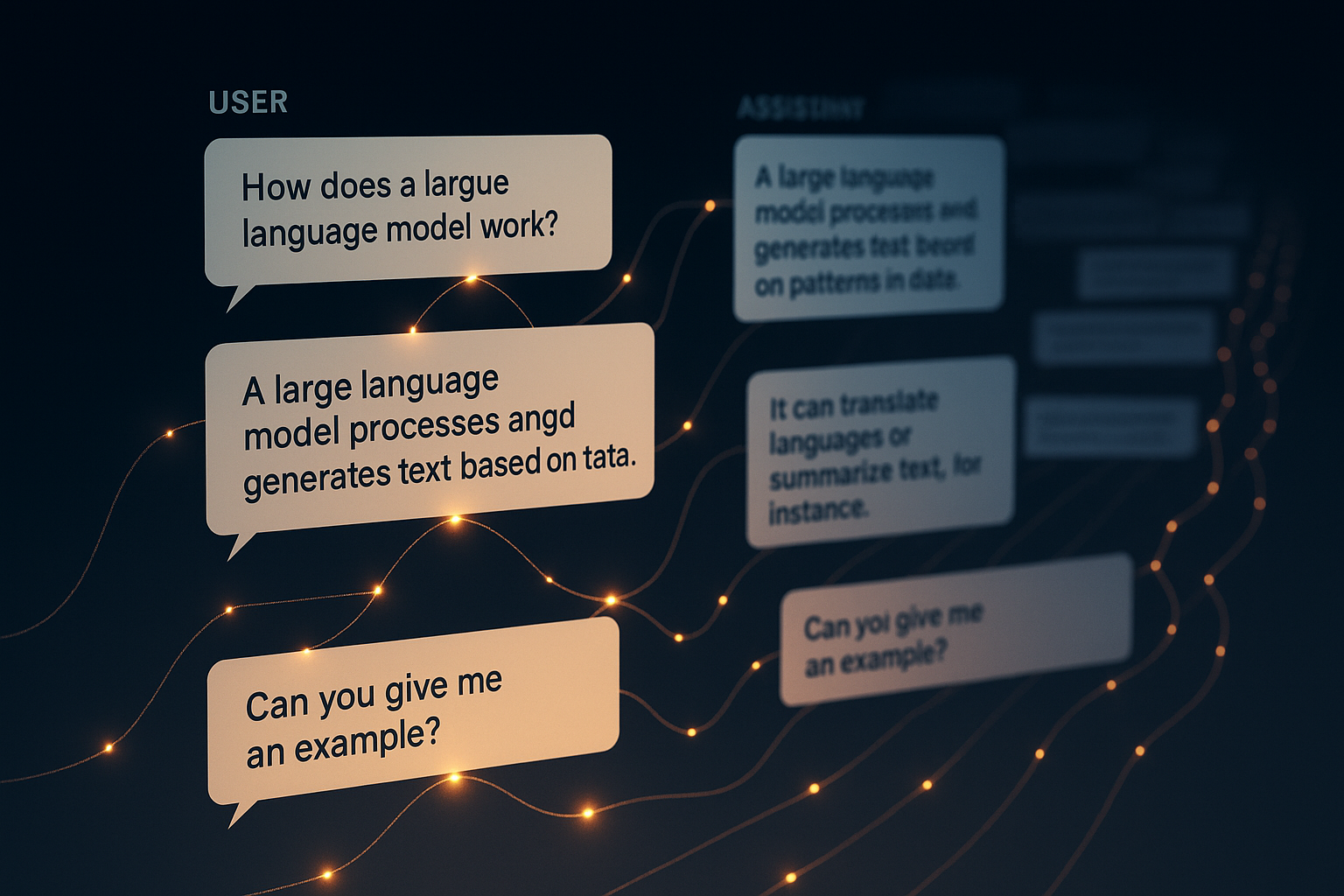

When you have a long conversation with a large language model (LLM) such as ChatGPT or Claude, it feels like the model remembers everything you’ve discussed. It references earlier points, maintains consistent context, and seems to “know” what you talked about pages ago.

But here’s the uncomfortable truth: the model itself doesn’t remember anything between messages. It’s not storing your conversation in memory the way a database would. Instead, the full conversation is fed back in with each request, and the model rereads it from the beginning every single time you send a message.

“A context window isn’t memory. It’s a performance where the model rereads its lines before every response.”
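To make the rereading concrete, here is a minimal sketch in Python. Everything in it is hypothetical and for illustration only: `stateless_model` is a stand-in for a real LLM call, `Chat` is an invented wrapper, and `count_words` is a crude whitespace count rather than a real tokenizer. The point is only that the model function keeps no state, so the caller has to hand it the whole transcript on every turn.

```python
from typing import Dict, List

Message = Dict[str, str]


def count_words(messages: List[Message]) -> int:
    # Crude stand-in for a tokenizer: counts whitespace-separated words.
    return sum(len(m["content"].split()) for m in messages)


def stateless_model(messages: List[Message]) -> str:
    # Hypothetical stand-in for an LLM call. It sees only what is passed in
    # and keeps nothing once it returns.
    return f"(reply after reading {len(messages)} messages, {count_words(messages)} words)"


class Chat:
    """Hypothetical chat wrapper: the application, not the model, holds the history."""

    def __init__(self) -> None:
        self.history: List[Message] = []
        self.words_read: List[int] = []  # how much the model reread on each turn

    def send(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        # The entire transcript so far is passed in again, not just the new message.
        self.words_read.append(count_words(self.history))
        reply = stateless_model(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


chat = Chat()
chat.send("My name is Ada.")
chat.send("What is my name?")
print(chat.words_read)  # the second turn rereads more than the first
```

If the second call passed only `"What is my name?"` instead of `self.history`, the model would have nothing to go on, which is why the amount of text reread grows with every turn.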